The term entropy was coined in 1865 by the German physicist Rudolf Clausius from Greek en- = in + trope = a turning (point). The word reveals an analogy to energy, and etymologists believe that it was designed to denote the form of energy that any energy eventually and inevitably turns into: useless heat. The idea was inspired by an earlier formulation by Sadi Carnot of what is now known as the second law of thermodynamics.

The Austrian physicist Ludwig Boltzmann and the American scientist Willard Gibbs put entropy into the probabilistic setup of statistical mechanics (around 1875). This idea was later developed by Max Planck. Entropy was generalized to quantum mechanics in 1932 by John von Neumann. Later this led to the invention of entropy as a term in probability theory by Claude Shannon (1948), popularized in a joint book with Warren Weaver, which provided the foundations of information theory.

The concept of entropy in dynamical systems was introduced by Andrei Kolmogorov and made precise by Yakov Sinai in what is now known as the Kolmogorov-Sinai entropy.

The formulation of Maxwell's paradox by James C. …

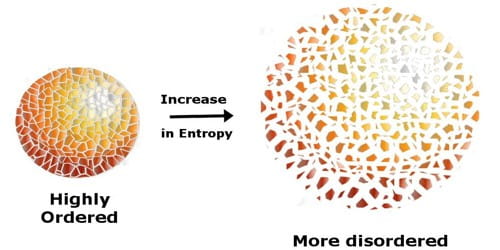

Figure 1: In a naive analogy, energy in a physical system may be compared to water in lakes, rivers and the sea. Only the water that is above sea level can be used to do work. Entropy represents the water contained in the sea.

In classical physics, the entropy of a physical system is proportional to the quantity of energy no longer available to do physical work. Entropy is central to the second law of thermodynamics, which states that the entropy of an isolated system never decreases: any irreversible process within it increases the entropy.

- In quantum mechanics, von Neumann entropy extends the notion of entropy to quantum systems by means of the density matrix.
- In probability theory, the entropy of a random variable measures the uncertainty about the value that might be assumed by the variable.
- In information theory, the compression entropy of a message (e.g. a computer file) quantifies the information content carried by the message in terms of the best lossless compression rate.
- In the theory of dynamical systems, entropy quantifies the exponential complexity of a dynamical system or the average flow of information per unit of time.
- In sociology, entropy is the natural decay of structure (such as law, organization, and convention) in a social system.
- In everyday language, entropy means disorder or chaos.

Information entropy is a measure of the information communicated by systems that are affected by data noise. Thermodynamic entropy is part of the science of heat energy: it is a measure of how organized or disorganized energy is in a system of atoms or molecules.
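The probability-theory and information-theory senses of entropy can be made concrete with Shannon's formula H(X) = -Σ p(x) log₂ p(x). The following is a minimal Python sketch that is not taken from the original text. The function names `shannon_entropy` and `empirical_entropy` are hypothetical. The sketch assumes that a message is modeled as independent draws from its own observed symbol frequencies. Under that assumption, Shannon's source coding theorem makes H a lower bound on the average number of bits per symbol of any lossless code.

```python
import math
from collections import Counter


def shannon_entropy(probs, base=2.0):
    """Entropy H = -sum(p * log p) of a discrete distribution (bits by default)."""
    total = sum(probs)
    if not math.isclose(total, 1.0, rel_tol=1e-9, abs_tol=1e-12):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 contribute nothing, since p*log(p) -> 0 as p -> 0.
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def empirical_entropy(message):
    """Per-symbol entropy (bits) of the symbol frequencies observed in `message`.

    Assumption: symbols are treated as independent draws from these frequencies.
    """
    counts = Counter(message)
    n = len(message)
    return shannon_entropy([c / n for c in counts.values()])


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))  # fair coin: maximal uncertainty for two outcomes
    print(shannon_entropy([0.9, 0.1]))  # biased coin: the outcome is easier to guess
    msg = "abracadabra"
    h = empirical_entropy(msg)
    # Under the i.i.d. model, no lossless code averages fewer than h bits per symbol.
    print(h, h * len(msg))
```

A fair coin yields exactly 1 bit, and the biased coin yields less, which matches the idea that entropy measures uncertainty. Real compressors also exploit correlations between symbols, so the per-symbol bound above applies only under the stated independence model.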

The entropy of an object is a measure of the amount of energy which is unavailable to do work. Entropy is also a measure of the number of possible arrangements the atoms in a system can have. In this sense, entropy is a measure of uncertainty or randomness. The higher the entropy of an object, the more uncertain we are about the states of the atoms making up that object, because there are more states to decide from. A law of physics says that it takes work to make the entropy of an object or system smaller; without work, entropy can never become smaller. You could say that everything slowly goes to disorder (higher entropy).

The word entropy came from the study of heat and energy in the period 1850 to 1900. Some very useful mathematical ideas about probability calculations emerged from the study of entropy. These ideas are now used in information theory, chemistry and other areas of study.

Entropy is simply a quantitative measure of what the second law of thermodynamics describes: the spreading of energy until it is evenly spread. The meaning of entropy is different in different fields.
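Boltzmann's formula S = k_B ln W links entropy to "the number of possible arrangements." Here W counts the microscopic arrangements (microstates) that are compatible with what is observed. The following is a minimal Python sketch of that relation. It assumes a hypothetical toy system of N two-state particles, each either "up" or "down." For such a system, W is the binomial coefficient C(N, n_up). The function names are illustrative only.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def microstates(n_particles, n_up):
    """Number of arrangements W of n_up 'up' particles among n_particles two-state particles."""
    return math.comb(n_particles, n_up)


def boltzmann_entropy(w):
    """Boltzmann entropy S = k_B * ln(W), in J/K."""
    return K_B * math.log(w)


if __name__ == "__main__":
    n = 100
    for n_up in (0, 10, 50):
        w = microstates(n, n_up)
        # More arrangements -> larger W -> higher entropy.
        print(n_up, w, boltzmann_entropy(w))
```

When every particle is "down" (n_up = 0), there is a single arrangement and the entropy is zero. The evenly mixed state (n_up = 50) has the most arrangements and therefore the highest entropy. In that state we are also least certain which particle is in which state.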
